Let’s not sugarcoat it: the digital mental health space has been a mess. It’s been a gold rush of apps and AI bots promising self-care and emotional support, with barely anyone checking what they actually do. That’s about to change.

Here’s what’s coming in digital mental health regulations across the US, UK, EU, India, and beyond. If you’re building, using, or trusting an AI therapy tool, read this like your emotional safety depends on it, because it kind of does.

🧠Fact: In 2023, the WHO published guidance on regulating AI in health, stressing issues that apply directly to mental health tools: data privacy, validation, and transparency. Frameworks like this are expected to shape digital mental health regulation globally.

Why Digital Mental Health Regulation Is Finally Catching Up

- Explosion of AI therapy apps: More people are relying on digital tools for emotional support, especially post-COVID.

- Data privacy nightmares: From sharing mood logs to tracking intimate thoughts, apps have been caught mishandling data.

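- Mental health malpractice: A few bots have given dangerously bad advice. In 2023, for example, the US National Eating Disorders Association took its Tessa chatbot offline after it offered weight-loss tips to users, and incidents like this are pushing regulators to act.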

Region-Wise Breakdown of 2025–26 Digital Mental Health Laws

USA

- The FDA and state laws are stepping in.

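- California’s bot-disclosure law (SB 1001) already requires bots to reveal that they’re bots when used to sell something or influence a vote, and newer laws target health chatbots directly. California’s AB 489 (2025), for example, bars AI tools from implying they’re licensed health professionals, so a bot posing as a human therapist is illegal.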

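- The FTC is tightening its grip on how mental health apps collect, store, and monetise user data. In 2023, it ordered BetterHelp to pay $7.8 million over allegations that it shared users’ sensitive health data with advertisers.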

UK & EU

- The EU’s AI Act puts AI mental health tools that qualify as medical devices in its “high-risk” category.

- MHRA now treats many therapy apps like medical devices, with all the accountability that comes with that.

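- GDPR enforcement is getting sharper around mental health data, which counts as “special category” health data under Article 9 and needs an explicit legal basis, such as explicit consent, before it can be processed.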

India

- The DPDP (Digital Personal Data Protection) Act, 2023 is coming into effect as its implementing rules roll out.

- Apps must ensure consent, clarity, and purpose limitation. The Act doesn’t create a separate “sensitive data” tier, so these baseline duties do the heavy lifting for mental health data.

- The CDSCO may classify more wellness apps under medical device regulation soon.

🧠Fact: The European Union’s Artificial Intelligence Act entered into force in August 2024 and sorts AI systems, including mental health chatbots, by risk level. Bans on prohibited practices have applied since February 2025, while the stricter certification and transparency obligations for high-risk systems phase in from August 2026 (August 2027 for AI built into regulated products such as medical devices).

What Are These Laws Asking For?

- Clarity: Are users chatting with AI or a human? The line must be obvious.

- Consent: Apps must clearly explain what data is being collected and why.

- Accountability: No more hiding behind ‘we’re just a tech platform.’ If you’re dealing with emotions, you’re in healthcare territory.

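- Mental health data privacy: Stored securely, never sold, and protected to HIPAA/GDPR standards or their local equivalents. (HIPAA itself applies only to covered entities such as healthcare providers and insurers, plus their business associates, so many wellness apps fall outside it.)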
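For builders, consent and purpose limitation can be made concrete in code. The sketch below is a minimal illustration under stated assumptions, not a compliance implementation: `ConsentRecord`, `check_use`, and the category and purpose names are all hypothetical, and a real system would also need audit logs, retention rules, and legal review.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical per-user record of which purposes each data category may serve."""

    user_id: str
    # Maps a data category (e.g. "mood_log") to the purposes the user agreed to.
    purposes: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, category: str, purpose: str) -> None:
        # Record that the user approved this specific purpose for this category.
        self.purposes.setdefault(category, set()).add(purpose)

    def revoke(self, category: str, purpose: str) -> None:
        # Withdrawing consent removes only that one purpose.
        self.purposes.get(category, set()).discard(purpose)

    def allows(self, category: str, purpose: str) -> bool:
        return purpose in self.purposes.get(category, set())


def check_use(consent: ConsentRecord, category: str, purpose: str) -> None:
    """Raise unless the user consented to this category being used for this purpose."""
    if not consent.allows(category, purpose):
        raise PermissionError(f"No consent to use {category!r} for {purpose!r}")


if __name__ == "__main__":
    consent = ConsentRecord(user_id="user-123")
    consent.grant("mood_log", "personalised_support")
    check_use(consent, "mood_log", "personalised_support")  # allowed, no error
    try:
        check_use(consent, "mood_log", "advertising")
    except PermissionError as err:
        print(err)
```

Recording consent per purpose, rather than as a single yes/no flag, is what lets an app show that mood logs were never reused for something like advertising.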

The Problem With Unregulated AI Therapy Tools

- Chatbot psychosis: Some people genuinely believed their bot was alive and sentient. That’s not cute; that’s a liability.

- Harmful advice: Bots giving dangerous suggestions for depression or suicidal thoughts have triggered real-world consequences.

- Ethics gaps: A lot of tools are trained on biased, incomplete, or outdated data.

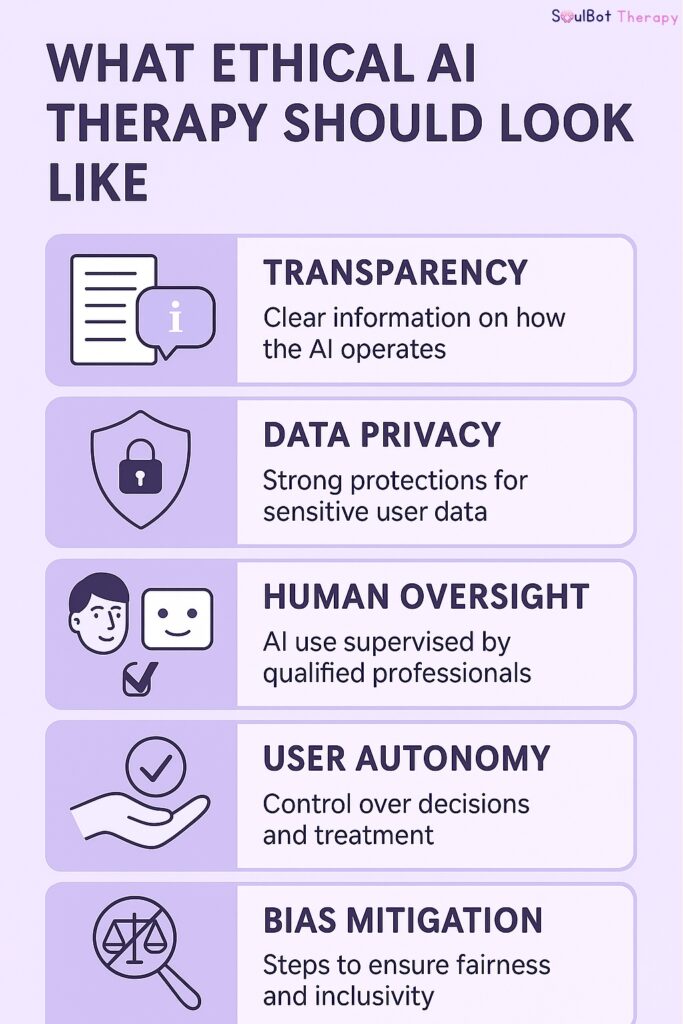

What Ethical AI Therapy Should Look Like

Let’s be real. Tech can help with mental health, but it needs serious boundaries:

- Always identify itself as AI.

- Make clear the difference between medical care and emotional support.

- Store data ethically and securely.

- Have emergency protocols that connect users in crisis with human help.
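The disclosure and emergency-protocol items can be sketched as a thin wrapper around whatever generates replies. This is a hypothetical, minimal sketch: `respond`, `is_crisis`, `demo_reply`, and the phrase list are illustrative names, and simple keyword matching is far too crude for real crisis detection, which needs clinically validated risk assessment and human escalation.

```python
from typing import Callable

# Hypothetical sketch: names and phrases are illustrative, not from any real SDK.
AI_DISCLOSURE = (
    "Hi, I'm an AI assistant, not a human therapist. "
    "I can offer emotional support, but not medical advice or diagnosis."
)

# Assumed crisis phrases; keyword matching alone is NOT adequate risk detection.
CRISIS_PHRASES = ("kill myself", "end my life", "suicide", "hurt myself")

CRISIS_RESPONSE = (
    "It sounds like you might be in danger. Please contact your local "
    "emergency number or a crisis line now. I'm flagging this for a human."
)


def is_crisis(message: str) -> bool:
    """Return True if the message contains any of the listed crisis phrases."""
    text = message.lower()
    return any(phrase in text for phrase in CRISIS_PHRASES)


def respond(
    message: str, first_turn: bool, generate_reply: Callable[[str], str]
) -> dict:
    """Wrap a reply generator with an AI disclosure and a crisis escalation path."""
    if is_crisis(message):
        # Emergency protocol: bypass the model and flag for human follow-up.
        return {"text": CRISIS_RESPONSE, "escalate": True}
    reply = generate_reply(message)
    if first_turn:
        # Disclosure: the first reply of a session always says this is an AI.
        reply = AI_DISCLOSURE + "\n\n" + reply
    return {"text": reply, "escalate": False}


def demo_reply(message: str) -> str:
    """Stand-in for a real model call (hypothetical)."""
    return "Thanks for sharing. What's been weighing on you most?"


if __name__ == "__main__":
    print(respond("I had a rough day", True, demo_reply)["text"])
    print(respond("I want to end my life", False, demo_reply)["escalate"])
```

Checking for crisis signals before calling the model means a risky message never depends on what the model happens to generate.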

🧠Fact: In the US, the FDA is updating its digital health guidance to cover AI-based behavioural therapy tools. The aim is to standardise how mental health apps are classified, especially those that claim therapeutic outcomes.

Where SoulBot Stands on Digital Mental Health Regulation

At SoulBot, we saw this coming.

- Built-in privacy-first architecture (we don’t sell data. Period.)

- Users are told when they’re talking to an AI.

- Support is guided by licensed frameworks and trauma-informed scripting.

- Encrypted mental health journaling tools.

We’re not here to replace therapists. We’re here to bridge the gap when access, stigma, or money keep people from getting help.

Final Word: The Law Can’t Be Optional Anymore

Digital mental health regulations aren’t overreach; they’re necessary. Emotional safety is real healthcare. Apps that handle trauma, identity, stress, or depression need to act like it.

And soon, the law will force them to. But the good ones, the ethical ones, won’t wait to be told.